Annotation-Powered Questionnaires

We at Hypothesis have been working with the Credibility Coalition (CredCo) to help develop a set of indicators that bear on the credibility of news stories. Some of these indicators (“Has Clear Editorial Policy”) apply to publications, others (“Is Original”) to individual stories as a whole, and still others (“Uses Straw Man Argument”) to language within stories.

The indicators are being tested by teams of annotators. Because many of the indicators apply to selections within stories, we’re using Hypothesis to anchor indicators to selections. And we’ve built a custom Hypothesis-based survey tool around that anchoring capability.

Although this tool was created for a particular set of survey questions, it’s driven by a generic JSON format that can be reused for other purposes.

Here’s how the first question in the CredCo survey is defined:

```json
{
  "Q01": {
    "type": "radio",
    "title": "Overall Credibility",
    "question": "Rate your impression of the credibility of this article",
    "choices": [
      "1.01.01:Very low credibility",
      "1.01.02:Somewhat low credibility",
      "1.01.03:Medium credibility",
      "1.01.04:Somewhat high credibility",
      "1.01.05:High credibility"
    ],
    "anchored": false
  }
}
```
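To make the "generic format" point concrete, here is a minimal Python sketch of how a client might load such definitions. It relies only on what the example shows. Each question is assumed to carry `type`, `title`, `question`, and `anchored` fields. `choices` is assumed to be optional, since free-text or anchored questions may have none. Each choice is assumed to be a `code:label` string. The `Question` and `Choice` classes and the `load_questions` function are hypothetical and are not part of the actual CredCo tool.

```python
import json
from dataclasses import dataclass, field

@dataclass
class Choice:
    code: str   # e.g. "1.01.01"
    label: str  # e.g. "Very low credibility"

@dataclass
class Question:
    qid: str
    type: str        # "radio", "checkbox", ... (only "radio" appears in the example)
    title: str
    question: str
    anchored: bool   # True if the answer is a selection in the target document
    choices: list = field(default_factory=list)

def parse_choice(raw: str) -> Choice:
    # Split on the first colon only, so labels may themselves contain colons.
    code, sep, label = raw.partition(":")
    if not sep:
        raise ValueError(f"expected 'code:label', got {raw!r}")
    return Choice(code.strip(), label.strip())

def load_questions(text: str) -> dict:
    """Parse a survey definition (assumed shape) into Question objects keyed by ID."""
    questions = {}
    for qid, spec in json.loads(text).items():
        questions[qid] = Question(
            qid=qid,
            type=spec["type"],
            title=spec["title"],
            question=spec["question"],
            anchored=bool(spec.get("anchored", False)),
            choices=[parse_choice(c) for c in spec.get("choices", [])],
        )
    return questions

# An abbreviated copy of Q01 (two of its five choices).
SURVEY = """{
  "Q01": {
    "type": "radio",
    "title": "Overall Credibility",
    "question": "Rate your impression of the credibility of this article",
    "choices": ["1.01.01:Very low credibility", "1.01.05:High credibility"],
    "anchored": false
  }
}"""

q01 = load_questions(SURVEY)["Q01"]
print(q01.title, [c.code for c in q01.choices])  # prints: Overall Credibility ['1.01.01', '1.01.05']
```

Keeping the numeric code and the human-readable label in one string keeps the definition file compact. Splitting them at load time still lets the code serve as a stable key for analysis.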

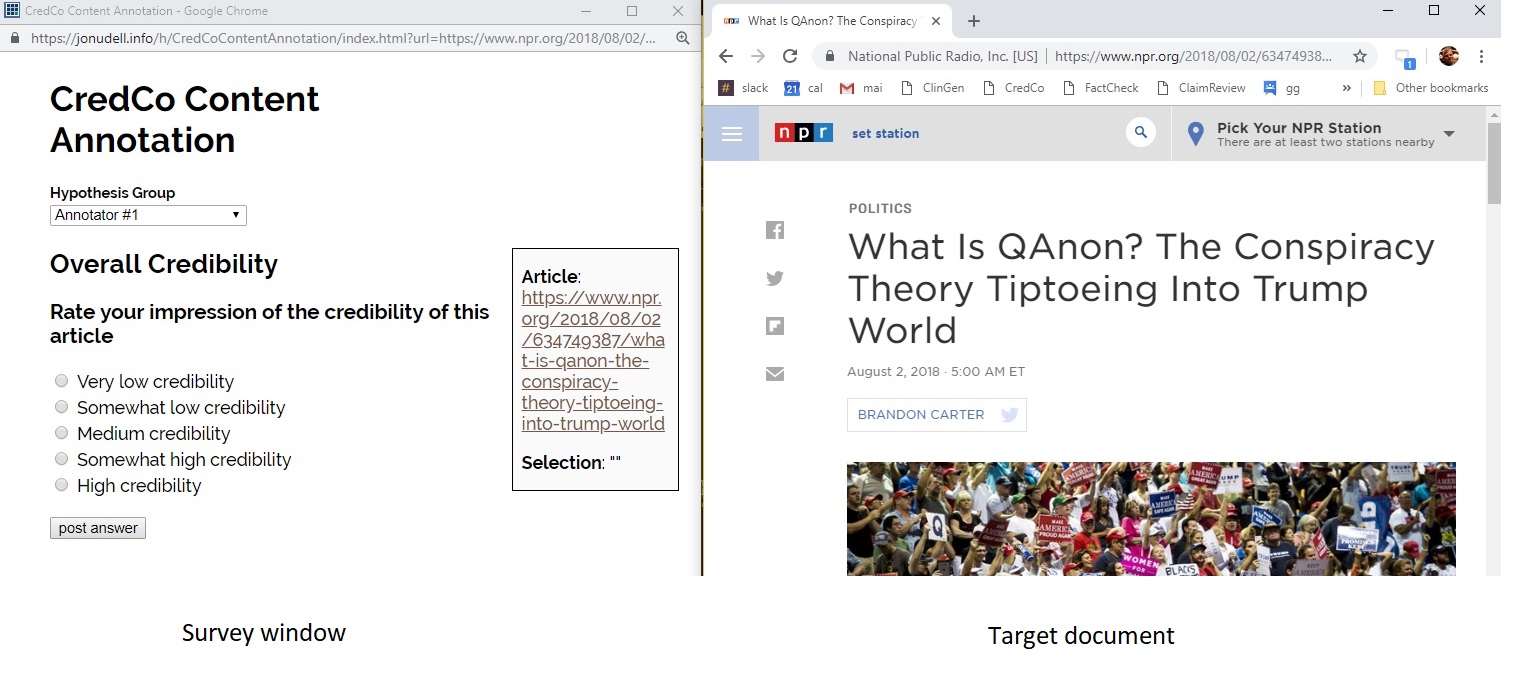

And here’s how that question is displayed.

The survey window (left) launches from the target document’s window (right) by way of a bookmarklet. Answers are posted to the annotation layer, so they’re visible on the target document and exportable by way of the Hypothesis API. For the CredCo project, which assigns several annotators to each document, it’s a requirement that annotators can’t see one another’s answers. We meet that requirement by placing each annotator into a private Hypothesis group (in this example, Annotator #1). The project coordinator, as the only other member of each of these per-annotator groups, can view and export everyone’s answers.
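As a rough illustration of the export side, the sketch below pages through one group's annotations. It uses the Hypothesis search endpoint (`/api/search`) with its `group`, `limit`, and `offset` parameters and a developer API token. The group ID and token are placeholders. A coordinator who belongs to every per-annotator group could run it once per group. Offset paging is the simplest approach, but the API limits how deep it can go, so a very large export may need the endpoint's `search_after` parameter instead.

```python
import json
import urllib.parse
import urllib.request

API = "https://api.hypothes.is/api"
PAGE_SIZE = 200  # the search endpoint's maximum page size

def search_url(group_id: str, offset: int = 0, limit: int = PAGE_SIZE) -> str:
    """Build a search URL restricted to one group."""
    query = urllib.parse.urlencode({"group": group_id, "limit": limit, "offset": offset})
    return f"{API}/search?{query}"

def export_group(group_id: str, token: str) -> list:
    """Fetch all annotations in a group that the token's user is allowed to read."""
    rows, offset = [], 0
    while True:
        request = urllib.request.Request(
            search_url(group_id, offset),
            headers={"Authorization": f"Bearer {token}"},
        )
        with urllib.request.urlopen(request) as response:
            page = json.load(response)["rows"]
        rows.extend(page)
        if len(page) < PAGE_SIZE:  # a short page means there is nothing left
            return rows
        offset += len(page)

# Usage (placeholders, requires network access):
# rows = export_group("GROUP_ID", "YOUR_API_TOKEN")
```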

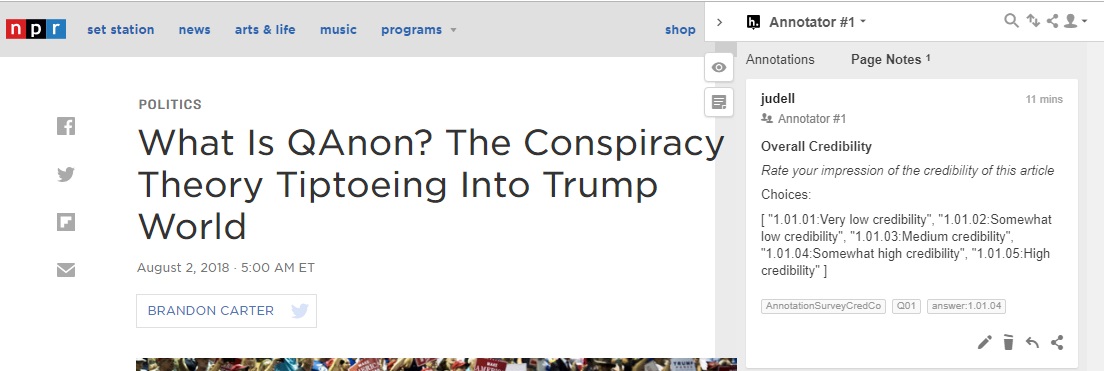

Here’s an answer to the first question:

For questions with defined sets of choices (i.e., those represented as sets of radio buttons or checkboxes), answers are posted as tags on annotations, which makes them conveniently navigable in the Hypothesis dashboard.
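Here is a minimal sketch of what posting a choice-type answer might look like, using the Hypothesis create-annotation endpoint (`POST /api/annotations`). The tag scheme is hypothetical and exists only for illustration: the question ID comes first, followed by each selected choice string. The text above says only that answers become tags. A target with a `source` but no selector is intended to yield a page-level note rather than an anchored highlight. Permissions are left out of this sketch. Consult the API reference for how to scope an annotation's visibility to a private group.

```python
import json
import urllib.request

API = "https://api.hypothes.is/api"

def answer_payload(uri: str, group_id: str, qid: str, qtype: str, selected: list) -> dict:
    """Build a page-level annotation body whose tags record the chosen answers."""
    if not selected:
        raise ValueError("no choice selected")
    if qtype == "radio" and len(selected) != 1:
        raise ValueError("a radio question takes exactly one choice")
    return {
        "uri": uri,
        "group": group_id,
        "text": "",
        # Hypothetical tag scheme: question ID, then one tag per selected choice.
        "tags": [qid, *selected],
        # Source without a selector: the answer applies to the whole document.
        "target": [{"source": uri}],
    }

def post_annotation(payload: dict, token: str) -> dict:
    """POST the payload and return the created annotation as parsed JSON."""
    request = urllib.request.Request(
        f"{API}/annotations",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {token}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Radio questions are checked for exactly one choice. A checkbox question would pass several strings in `selected`, and each one would become its own tag.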

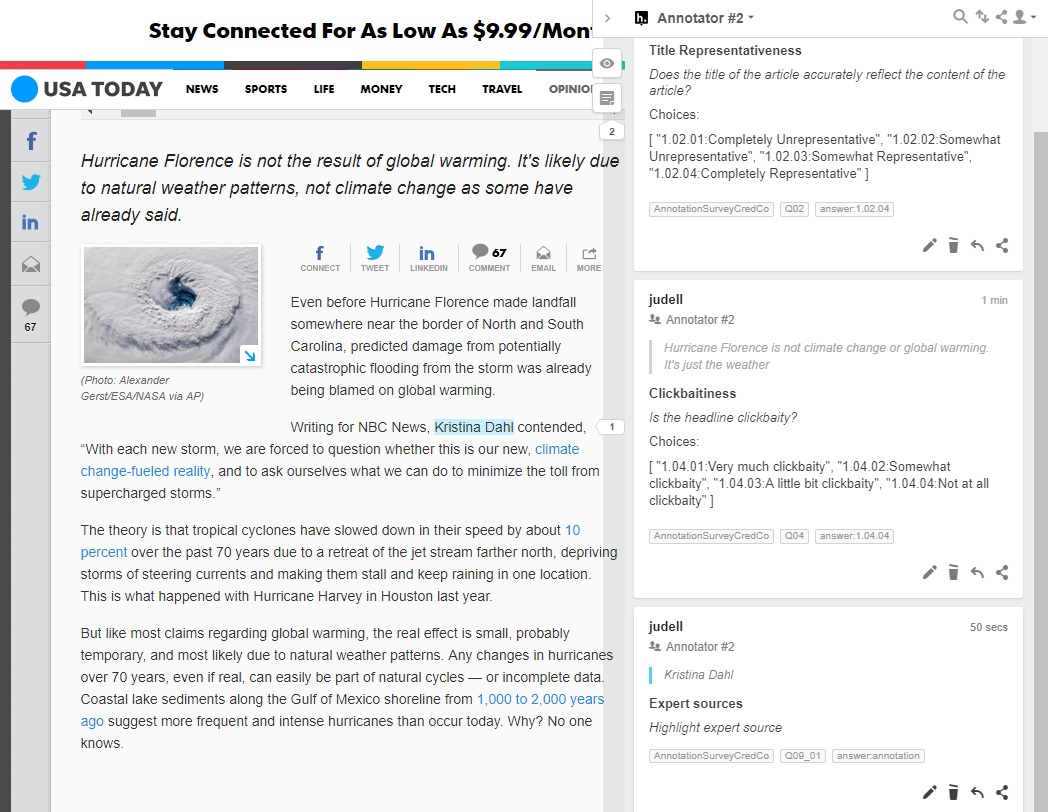

Radio buttons, checkboxes, and input boxes are the usual ways to answer survey questions. But what if the answer to a question is a selection in a document? For example, the CredCo survey asks annotators to highlight expert sources. Annotators do that by selecting text in the target document and sending it to the survey app, which posts an annotation that anchors to the selection. In this example, “Kristina Dahl” is one of the expert sources identified in a USA Today story on Hurricane Florence.
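For an anchored answer, what matters is the annotation's target. Hypothesis anchors annotations using W3C Web Annotation selectors. The sketch below builds a `TextPositionSelector` (character offsets) and a `TextQuoteSelector` (the exact text plus surrounding context) from a selection in a document's plain text. The function names, the 32-character context width, the question ID `Q07`, and the sample sentence are all illustrative assumptions. The real client computes selectors from the browser's DOM, which is more involved.

```python
def quote_selectors(doc_text: str, start: int, end: int, context: int = 32) -> list:
    """Describe doc_text[start:end] by character offsets and by quote plus context."""
    if not (0 <= start < end <= len(doc_text)):
        raise ValueError("selection must be a non-empty range inside the text")
    return [
        {"type": "TextPositionSelector", "start": start, "end": end},
        {
            "type": "TextQuoteSelector",
            "exact": doc_text[start:end],
            "prefix": doc_text[max(0, start - context):start],
            "suffix": doc_text[end:end + context],
        },
    ]

def anchored_payload(uri: str, group_id: str, qid: str,
                     doc_text: str, start: int, end: int) -> dict:
    """Annotation body for an answer that is a selection in the target document."""
    return {
        "uri": uri,
        "group": group_id,
        "tags": [qid],  # hypothetical: tag the answer with its question ID
        "target": [{"source": uri, "selector": quote_selectors(doc_text, start, end)}],
    }

text = "Sample text naming Kristina Dahl as a source."  # invented sample, not from the story
start = text.index("Kristina Dahl")
payload = anchored_payload("https://example.com/story", "grp123", "Q07",
                           text, start, start + len("Kristina Dahl"))
```

Recording both selectors is a hedge against change. If the page is later edited and the offsets drift, the quote and its context can still be used to find the selection again.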

When the data are aggregated, the answer won’t just be “Kristina Dahl.” It will also encompass the identity of the document that contains the response, and the response’s location within that document. And it will be available for discussion in that context. Conversation in the margin of the document isn’t a requirement for CredCo’s qualitative research. But it could be for another research project, and certainly would be for a teacher who asks students to highlight examples of rhetorical strategies and wants to discuss their examples in context.
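To show how an anchored answer keeps its context when aggregated, here is a sketch that groups exported annotations by document URI and quoted text. The rows are shaped like those returned by the search API. Annotation IDs are kept so that each answer can be traced back to its place in the document. Filtering on a question-ID tag such as `Q07` is an assumption about how answers are tagged, and the rows below are made up.

```python
from collections import defaultdict

def exact_quote(row: dict):
    """Return the TextQuoteSelector's exact text, or None for page-level annotations."""
    for target in row.get("target", []):
        for selector in target.get("selector", []):
            if selector.get("type") == "TextQuoteSelector":
                return selector.get("exact")
    return None

def aggregate(rows: list, qid: str) -> dict:
    """Map (document URI, quoted text) to the IDs of annotations giving that answer."""
    table = defaultdict(list)
    for row in rows:
        if qid not in row.get("tags", []):
            continue  # an answer to some other question
        quote = exact_quote(row)
        if quote is not None:
            table[(row["uri"], quote)].append(row["id"])
    return dict(table)

STORY = "https://example.com/story"
rows = [  # made-up rows for illustration
    {"id": "a1", "uri": STORY, "tags": ["Q07"],
     "target": [{"source": STORY,
                 "selector": [{"type": "TextQuoteSelector", "exact": "Kristina Dahl"}]}]},
    {"id": "a2", "uri": STORY, "tags": ["Q07"],
     "target": [{"source": STORY,
                 "selector": [{"type": "TextQuoteSelector", "exact": "Kristina Dahl"}]}]},
    {"id": "a3", "uri": STORY, "tags": ["Q01", "1.01.03:Medium credibility"],
     "target": [{"source": STORY}]},
]
print(aggregate(rows, "Q07"))
```

Keying on quote text alone would merge two separate mentions of the same name in one document. Adding the `TextPositionSelector` offsets to the key would keep those mentions apart.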